Welcome to The Cusp: cutting-edge AI news (and its implications) explained in simple English.

In this week's issue:

- Tech layoffs are forcing programmers out of work. And with AI coding assistants now writing over 30% of new code, many of them won't be hired back.

- A useful mental model for quickly understanding how AI works: AI as compression.

- A couple of neat changes to this newsletter.

Let's dive in.

1: Coding assistants reduce the average amount of code humans need to write by over 30%

Remember GitHub Copilot? The "pair programmer in your pocket" that was introduced by Microsoft last year?

At first, Copilot was little more than an AI-powered code completion widget. But since then, it's been iterated upon and improved at a rapid pace.

Today, it not only completes your code, but also writes new functions and documents files seamlessly, all while taking into account the surrounding code you're working in.

Shockingly: for many programming languages, over 30% of newly written code is now generated by AI assistants like Copilot.

Put another way: that's 30% of code not being written by humans.

Tech layoffs are accelerating job displacement

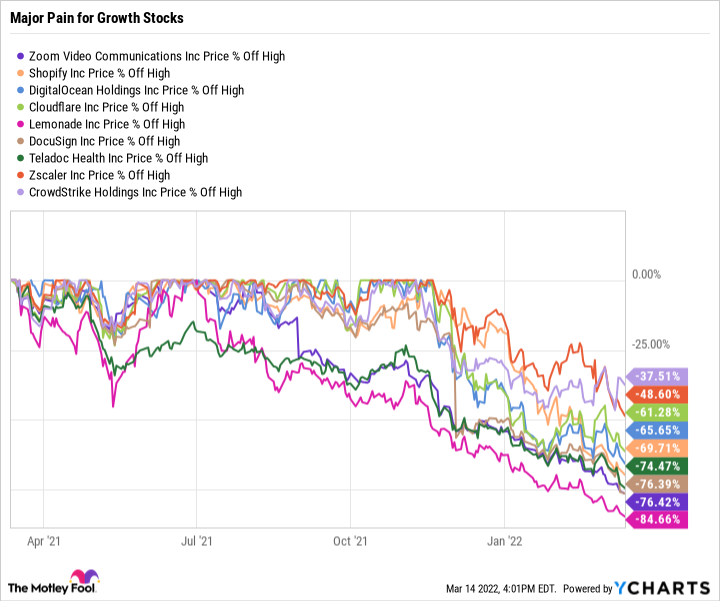

Advances in AI programming come at a particularly devastating time, since we're also seeing mass tech layoffs across the industry.

Companies are shedding jobs in droves (mostly due to COVID-19-induced economic uncertainty). In the last month alone:

- Spotify fired 5% of their staff.

- DocuSign let go of 9% of their workforce.

- Microsoft fired over 1,000 employees.

- Facebook instituted its first hiring freeze since the company started in 2004.

We're probably only seeing the beginning. And when you combine this with the rise of programming automation tools like Copilot, it's not hard to see the future of software engineering as bleak (for humans, at least).

What does this mean for us?

Whether or not the economy recovers quickly:

- With more programmers laid off, and more AI-generated code, the role of the human programmer will continue to diminish.

- The trend towards AI programming is only going to accelerate as compute-optimal, Chinchilla-like models (trained on far more data relative to their size) become the standard.

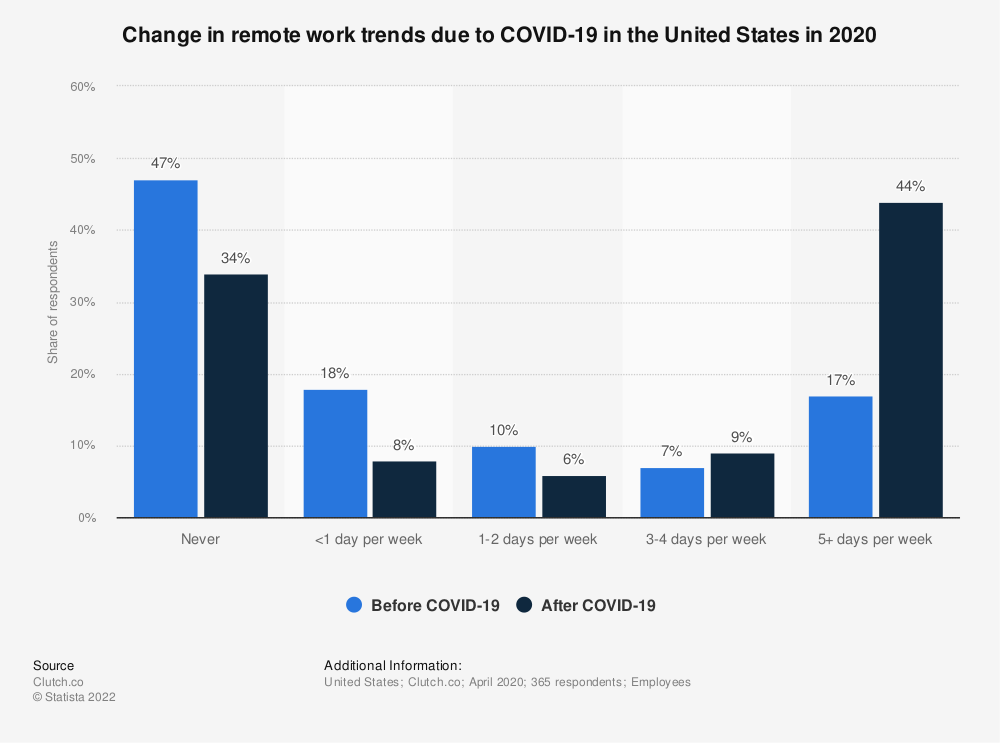

Just like "remote work" exploded in popularity during COVID-19 (and declined only marginally afterward), many companies simply won't hire back programmers once they're gone.

They won't need to: their remaining staff will pick up the slack with AI, until there's no need for them, too.

How can we take advantage of this?

If you're in tech, know this: junior programmers will be the first to go.

It'll be more cost-effective to outfit skilled seniors with AI assistants and raise expectations around volume than to invest time and energy in newbies. Consider:

- Senior programmers more naturally understand how to prompt the AI.

- They're also better able to verify whether AI-generated code is good or not.

- Senior programmers better understand how each module or function fits into the larger context, so they won't be limited to piecemeal sections (as juniors would be).

Given the above, here's how to future-proof yourself:

- Are you a programmer? Choose positions where you work on complex codebases with many interlinked dependencies.

- Get into management or mentorship as soon as possible.

- Are you a founder/manager? Invest in a small number of star programmers rather than a large number of mediocre ones. The former will let you take full advantage of the coming AI coding boom.

2: AI as compression

AI is extremely complicated. That's why some of the smartest people in the world work on it – they need those smarts, or they wouldn't be able to meaningfully contribute to its growth.

But most of us don't care about spearheading the theoretical underpinnings of AI. We just want to be able to do cool things with it. Luckily, you don't need a PhD in rocket science for that.

Instead, all you have to do is internalize a few effective mental models of how AI works. And one such model that's been gaining popularity with non-technical audiences is AI as compression.

AI as compression is a simple, straightforward way to understand a ton of recent advances in artificial intelligence, and it takes just a few minutes to learn. Let me show you.

Ever unzipped a file?

What really goes on under the hood when you zip or unzip a file? A simplified explanation might be:

- Files are stored as long streams of numbers and symbols.

- Sometimes, there are patterns in these numbers. For instance, you might have a sequence that looks like this: 8344444444493.

- Let's say it takes one "unit" of information to store each digit. Taken this way, the above number would require 13 units of memory (since it's 13 digits long).

- But a lot of that information is redundant. The number 4, for instance, repeats 9 times. Couldn't we represent that information better?

- Of course! If you add a symbol like * that means "repeat the previous digit this many times", you can get away with storing that entire number in only 7 units: a decrease of about 46%, almost half!

- What would it look like in practice? 834*993. The 4 with a *9 after it is taken to mean "4 repeated 9 times".
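The scheme above can be sketched in a few lines of Python. This is a minimal illustration, not how real compression tools are written: it assumes the input contains only the digits 0-9, and it keeps every count to a single digit (splitting longer runs) so the decoder can tell where a count ends. The function names `rle_encode` and `rle_decode` are hypothetical.

```python
def rle_encode(digits: str) -> str:
    """Collapse runs of a repeated digit into '<digit>*<count>'.

    Assumption: the input contains only the characters 0-9. Counts are
    kept to a single digit (max 9) so the decoder always knows where a
    count ends; longer runs are split into chunks of at most 9.
    """
    out = []
    i = 0
    while i < len(digits):
        # Measure the run of identical digits starting at position i (capped at 9).
        j = i
        while j < len(digits) and digits[j] == digits[i] and j - i < 9:
            j += 1
        run = j - i
        if run >= 4:
            # '<digit>*<count>' costs 3 units, so it only saves space for runs of 4+.
            out.append(f"{digits[i]}*{run}")
        else:
            out.append(digits[i] * run)
        i = j
    return "".join(out)


def rle_decode(encoded: str) -> str:
    """Reverse rle_encode: expand every '<digit>*<count>' back into a run."""
    out = []
    i = 0
    while i < len(encoded):
        if i + 1 < len(encoded) and encoded[i + 1] == "*":
            # Digit, '*', then a single-digit count.
            out.append(encoded[i] * int(encoded[i + 2]))
            i += 3
        else:
            out.append(encoded[i])
            i += 1
    return "".join(out)


original = "8344444444493"
packed = rle_encode(original)
print(packed, len(original), len(packed))  # 834*993 13 7
print(rle_decode(packed) == original)      # True
```

The single-digit count is a deliberate design choice: if counts could be any length, "4*93" would be ambiguous (9 fours followed by a 3, or 93 fours?). The sketch also only collapses runs of 4 or more, since writing `4*3` takes as many units as `444`.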

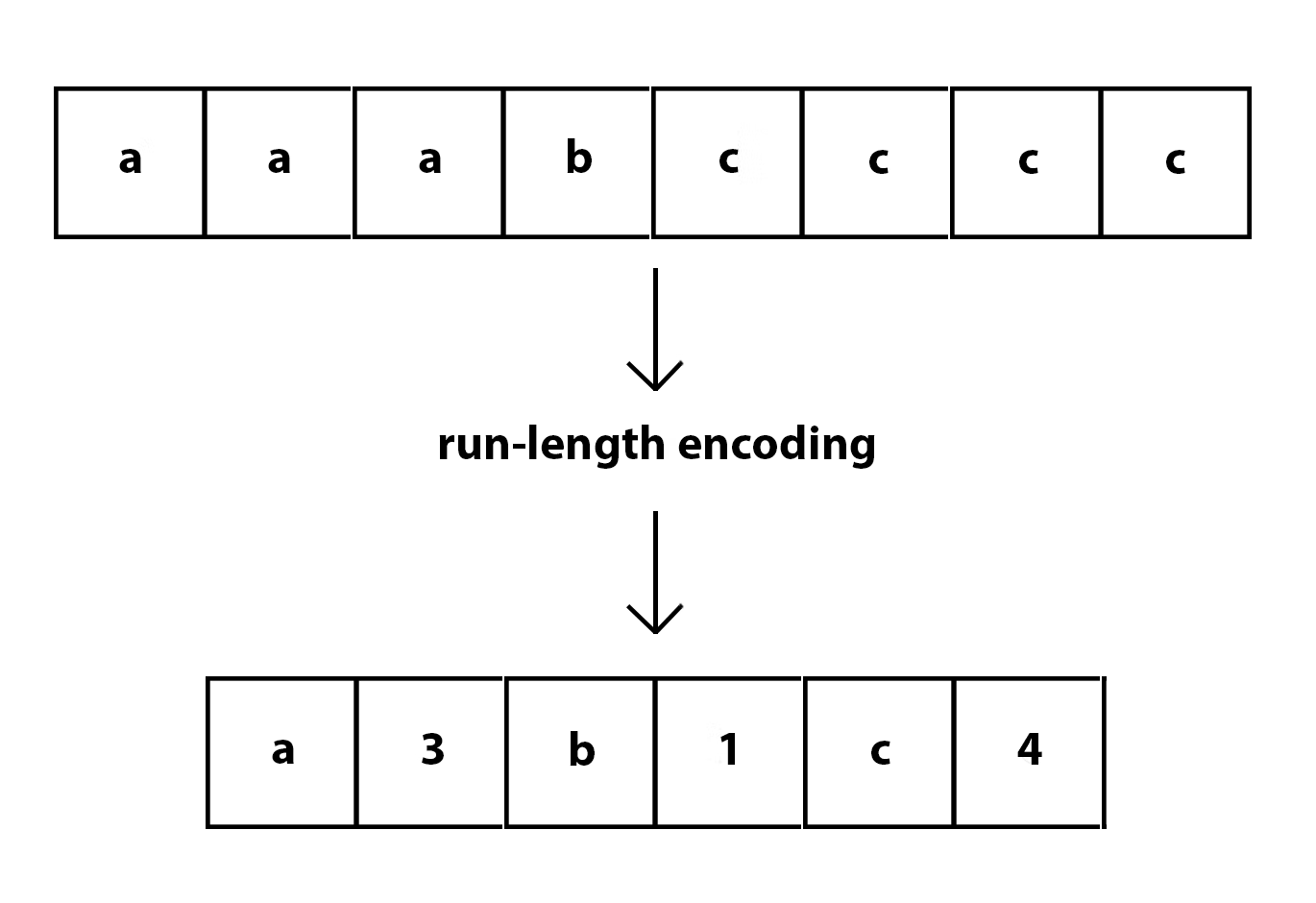

The above algorithm actually has a name: run-length encoding (RLE). It works by replacing each run of repeated values with a single copy of the value plus a count, instead of storing the whole run.

Your computer uses RLE alongside related techniques to compress and decompress data. (ZIP files, for example, usually rely on an algorithm called DEFLATE, which replaces repeated sequences with short references to earlier copies.) To put it simply: compression finds patterns, then uses them to represent the same information in less storage space. And when you want the original file back, you just reverse the process.
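To see the same idea in a real tool, Python's standard-library `zlib` module implements DEFLATE. This sketch compresses an input that is almost entirely one repeated digit and a random input of the same length; the exact byte counts vary with the zlib version and settings, so treat the printed sizes as illustrative.

```python
import os
import zlib

# Mostly one long run of the digit 4 (lots of pattern) vs. random bytes (no pattern).
repetitive = b"83" + b"4" * 10_000 + b"93"
noisy = os.urandom(len(repetitive))

for name, data in [("repetitive", repetitive), ("noisy", noisy)]:
    packed = zlib.compress(data, 9)  # level 9 = strongest compression
    # Decompressing must give back exactly the original bytes (lossless).
    assert zlib.decompress(packed) == data
    print(f"{name}: {len(data)} bytes -> {len(packed)} bytes")
```

The random bytes typically come out slightly larger than they went in: with no patterns to exploit, there's nothing to shrink, and the format adds a little overhead.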

AI works in a similar way

Turns out AI does something extremely similar.

Ever used an image upscaling AI? (If not, visit this link, spend a minute, and then come back when you're done.)

In short: image upscaling models take a small, blurry image as input, and send back a big, high-quality image as output.

At a fundamental level, AI is doing something similar to "unzipping" that image. It treats your image as if it were already compressed, and then finds patterns (like repetitions, if you want to think about it in terms of run-length encoding) to decompress it.

The main difference? These patterns are learned organically through training instead of being explicitly programmed by a software engineer. And it makes sense: run-length encoding is an easy enough algorithm to write (if a digit repeats several times in a row, replace the run with the digit, a *, and the count), but "darken the pixels between a human's teeth just enough so that they're distinguishable" is... a little more difficult.
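For contrast, here is what a hand-written "decompression" rule for images might look like: nearest-neighbor upscaling, which simply repeats every pixel. This is a toy sketch that assumes a grayscale image stored as a small grid of brightness values (0 = black, 255 = white), and `upscale_nearest` is a hypothetical name. Notice that it makes the image bigger without adding any new detail; guessing plausible detail is exactly the part AI upscalers learn from training data.

```python
def upscale_nearest(image: list[list[int]], factor: int) -> list[list[int]]:
    """Enlarge a grayscale image by repeating every pixel factor x factor times.

    A hand-coded rule: no learning involved, so no new detail is created.
    """
    bigger = []
    for row in image:
        # Repeat each pixel horizontally...
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # ...then repeat the widened row vertically.
        for _ in range(factor):
            bigger.append(list(wide_row))
    return bigger


tiny = [
    [0, 255],
    [255, 0],
]
for row in upscale_nearest(tiny, 2):
    print(row)
# [0, 0, 255, 255]
# [0, 0, 255, 255]
# [255, 255, 0, 0]
# [255, 255, 0, 0]
```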

What about text generators?

Remarkably, text generation models like GPT-3 can be understood in exactly the same way.

Your prompt? It plays the role of the "compressed" file before unzipping. The model uses the patterns it learned during training to analyze it, then expands it into the final version: your "uncompressed" text completion.
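GPT-3 is a large neural network, not a table of word counts, so treat this as a toy illustration of the mental model only. It "trains" by counting which word tends to follow which in a tiny made-up corpus, then "decompresses" a short prompt by repeatedly appending the most likely next word. The corpus and the names `train_bigrams` and `complete` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """'Training': count which word follows which in the example text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def complete(prompt: str, follows: dict[str, Counter], max_words: int = 10) -> str:
    """'Decompress' a prompt by repeatedly appending the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        options = follows.get(words[-1])
        if not options:
            break  # never saw this word during training: no pattern to apply
        words.append(options.most_common(1)[0][0])
        if words[-1] == ".":
            break  # stop at the end of a sentence
    return " ".join(words)


# Toy corpus (an assumption for illustration); "." is treated as a word.
corpus = (
    "the dog sat on the mat . "
    "the cat sat on the mat . "
    "a bird sat on the fence ."
)
model = train_bigrams(corpus)
print(complete("the cat", model))  # the cat sat on the mat .
```

Prompting with a word the model never saw (say, "zebra") returns the prompt unchanged: with no learned pattern to apply, there's nothing to expand.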

Changes to this newsletter

Lastly, as you've probably already noticed, this issue has some big changes. Namely:

- The name has changed from Nick Saraev (how conceited!) to The Cusp.

- The language is now simpler & more to the point. Most of you are executives, programmers, or businesspeople; I want to improve information density and maximize your ROI.

- We've clarified the focus of this newsletter: The Cusp will cover real-world consequences and applications of AI, and be published every Wednesday.

With well over a thousand of you reading, applications & consequences are by far the two most frequently requested topics. You all (understandably) want to know what the future will look like, how you'll fit into it, and whether there are opportunities you can take advantage of. This newsletter will provide all three.

AI has come an immense distance in just the last few years. And there's also more information on the subject than ever. But the average quality of that information has plummeted: more noise, less signal. I want this newsletter to be a beacon of light in a stormy sea of clickbait and subpar discourse.

Enjoyed this? Consider sharing it with someone you know. And if someone forwarded this to you, get the next issue by signing up here.

See you next week.

– Nick