It's no exaggeration to say that artificial intelligence is having sweeping impacts on our world. From the way consumers interact with technology to the increasingly AI-driven way most businesses operate today, artificial intelligence is everywhere.

It's also only going to become more pervasive in the coming years, as large companies like Amazon and Google continue their veritable space race to dominate the AI market.

Because of its massive social and cultural relevance, AI is worth learning a little about. That's what we're going to do in this article: walk through a few basic AI concepts to make your next discussion (or business project) more likely to succeed.

Artificial intelligence: what is it?

To begin our discussion on basic AI concepts, let's first define artificial intelligence.

Put simply, AI over the last sixty years has meant making our computers think more like humans. People are dynamic, flexible, and creative; computers, on the other hand, are incapable of doing more than following a set of very specific instructions. We want our systems to be more of the former and less of the latter.

With modern statistical techniques, this is becoming possible. Today's neural-network-based AI is inspired by, and in some respects already replicates, certain functions of the human brain. Though still limited in capacity, such nascent AI is strong evidence that we're moving towards flexible, autonomous systems.

Types of artificial intelligence

The age-old dream of a walking, talking, feeling robot à la 'The Jetsons' is, unfortunately, still a ways away. Until now, AI engineers have instead spent the bulk of their time building models that perform very well within a small, tightly defined field or niche.

Such artificial intelligences are termed narrow AI, because they are good at doing only a few specific things. Understanding basic AI concepts like narrow AI is crucial if you want to make sense of the next decade, so let's take a closer look.

Narrow artificial intelligence

The artificial intelligences of today do certain things very well and other things not at all; i.e., their utility is narrow. But don't mistake narrow for unimpressive: today's narrow AI mediates a large share of what we do on the Internet by syndicating, curating, and monitoring content on social media platforms, and some would argue it's already shaping our culture.

Other narrow AIs already in use include computer vision models, which outperform human beings at a variety of recognition and identification tasks on digital images and video. We've also built narrow game-playing models, narrow language processing models, narrow generative models, and more.

Though these narrow AIs can certainly beat a human at the activity they were designed for, they often struggle with tasks that differ even slightly from the ones they were trained on. So despite its impressive performance on object recognition, for example, a computer vision model can't (yet) write, speak, or develop software, because it was never trained to do so.

General artificial intelligence

Narrow AI stands in contrast with its significantly more formidable cousin: artificial general intelligence (AGI). AGI is still the stuff of science fiction, and is characterized by intelligence that is not focused on a specific task but can instead handle a wide range of cognitive functions as well as (or better than) humans. It is one of the most important basic AI concepts to understand because of its potentially enormous, even catastrophic, impact.

In theory, general AI would be capable of outperforming humans in just about every intellectual endeavor - including activities that are currently seen as the exclusive domain of human beings, such as creativity, strategic thinking, and social skills.

This is for several reasons. The biggest may be that human neural impulses are limited to travelling at ~100 meters per second, whereas the speed of conduction in an artificial mind is closer to 300,000,000 meters per second (the speed of light).

That alone is a 3,000,000x speedup, meaning that if an AGI were just as smart as a human (and its mind were built in a similar way to ours), it could in principle accomplish tasks close to three million times faster. By that arithmetic, some argue that a thousand or two human-level artificial intelligences could match the economic output of our entire planet.
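The back-of-the-envelope arithmetic behind these figures is shown below. It assumes, as the argument above does, that thinking speed scales directly with signal speed, which is a strong simplification rather than an established fact:

```latex
\text{speedup} = \frac{3 \times 10^{8}\ \text{m/s}}{10^{2}\ \text{m/s}} = 3 \times 10^{6}
\qquad
(1{,}000 \text{ to } 2{,}000) \times 3 \times 10^{6} = 3 \times 10^{9} \text{ to } 6 \times 10^{9} \text{ human-equivalents}
```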

Another reason is that biological evolution may have left a fair amount on the table, optimization-wise. There are good arguments to the contrary, but many believe that gradient descent, the main optimization algorithm used to train most statistical machine learning models today, adjusts connection weights more efficiently than human brains do.

Which naturally leads to...

The Singularity

Many prominent scientists believe such an AGI will be developed in the next few decades and that it will have a devastating (or liberating) effect on humankind; some go as far as to call its arrival the Singularity.

Perhaps the most speculative of basic AI concepts, the Singularity is the notion that once artificial intelligence surpasses human intelligence, it will improve itself at an accelerating rate, eventually reaching a point where humans can no longer understand or control it.

Some proponents of the Singularity believe this event will mark the end of humanity as we know it, while others see it as an opportunity for us to achieve a state of unimaginable prosperity and technological achievement.

If you assume a sufficiently rapid rate of technological improvement, it's not hard to see how a singularity could come about. In fact, some people believe it's already happening - with exponential increases in computing power and data storage, artificial intelligence is rapidly getting better at learning and problem solving.

AI is already writing code, learning to run, and more; the gap between human and artificial intelligence is shrinking fast and will likely keep shrinking over the coming decades.

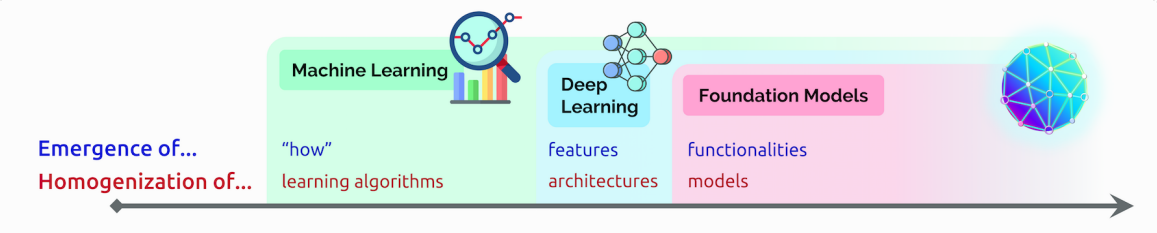

Where AI progress is today: foundation models

As we move further into the digital age, models are becoming less and less narrow. This has called for a new basic AI concept: the foundation model.

Foundation models are chiefly distinguished by their adaptability. Whereas narrow AI is restricted to one or a few very specific tasks, foundation models can be adapted to many, and some are beginning to achieve multimodality: the ability to understand several kinds of data (like text, images, and video) at once, rather than just the one they were originally designed for.

The most recent (and perhaps impactful) development of the sort is in natural language processing, a subfield of AI that deals with teaching computers how to understand human language.

GPT-3, a model released by OpenAI in 2020, was trained for a single task: predicting the next word in a passage of text, which lets it understand and generate text. However, once it was made available through OpenAI's API, users quickly found that it could do far more than that objective suggests. GPT-3 can do simple arithmetic, follow some logical reasoning, and, with modifications, even generate images (OpenAI's original DALL·E was a version of GPT-3 trained on text-image pairs). And on its original task, text generation, its output is often hard to distinguish from human writing.

To illustrate this, I wrote this article partly with GPT-3. It excels at explaining straightforward topics in an easy-to-understand way, and although it sometimes introduces factual inaccuracies, it significantly speeds up writing.

You can liken using such a tool to the power loom during the Industrial Revolution: the output of a single weaver (or writer) is multiplied a dozen times or more. Instead of doing the grunt work, you're steering the result where you want it to go. Over the next ten to twenty years, many expect large industries (driving, advertising, and marketing, to name a few) to follow suit, with significant impacts on the labor market.

The mathematics of artificial intelligence

Far from being a basic AI concept, the mathematics that underpins artificial intelligence is diverse and complex. Though a full treatment is beyond the scope of this article, it's important to understand that AI math spans a wide range of concepts and tools, from linear algebra, to probability and statistics, to game theory, and more. We'll briefly cover the first two, linear algebra and probability and statistics, below.

Linear algebra

Linear algebra is important for AI because it provides a way to model relationships between data points. In particular, linear algebra allows agents to represent data in terms of vectors and matrices, and extend this notion to higher dimensions.
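As a minimal sketch of "data points as vectors," the snippet below represents three hypothetical documents as vectors of word counts over a made-up three-word vocabulary ("ball", "goal", "vote"), stacks them into a matrix, and measures how related each pair is using cosine similarity. The data and vocabulary are illustrative assumptions; real systems use learned vectors with hundreds or thousands of dimensions.

```python
import math

# Each data point is a vector: hypothetical counts of the words
# ("ball", "goal", "vote") in three short documents.
sports_a = [3, 2, 0]
sports_b = [4, 1, 0]
politics = [0, 0, 5]

def dot(u, v):
    """Dot product: sum of element-wise products."""
    return sum(a * b for a, b in zip(u, v))

def norm(v):
    """Euclidean length of a vector."""
    return math.sqrt(dot(v, v))

def cosine_similarity(u, v):
    """Cosine of the angle between u and v.

    1.0 means the vectors point the same way; 0.0 means they are
    orthogonal (here: the documents share no words at all).
    """
    return dot(u, v) / (norm(u) * norm(v))

# Stacking the vectors as rows gives a 3 x 3 matrix (points x features).
data = [sports_a, sports_b, politics]

# Compare every point with every other point.
for u in data:
    print([round(cosine_similarity(u, v), 3) for v in data])
```

The two sports documents come out highly similar, while the politics document is orthogonal to both, which is exactly the kind of relationship between data points that vector representations make measurable.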

Matrices are at the crux of how agents identify patterns and relationships in data. Much of machine learning can reasonably be described as matrix operations: the Transformer architecture underlying GPT-3, for example, is built largely from large matrices multiplied together many times, interleaved with a few nonlinear steps (such as softmax), to produce another matrix that represents a sentence.
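To make "mostly matrix multiplications" concrete, here is a toy, pure-Python sketch of scaled dot-product attention, the core operation inside a Transformer layer: softmax(Q Kᵀ / √d) V. The tiny matrices `X`, `W_q`, `W_k` and `W_v` are made-up values for illustration. A real model like GPT-3 learns these weights, uses many attention heads and thousands of dimensions, and adds further steps (feed-forward layers, normalization, masking) that are not shown here.

```python
import math

def matmul(A, B):
    """Multiply an (n x k) matrix A by a (k x m) matrix B."""
    return [[sum(A[i][t] * B[t][j] for t in range(len(B)))
             for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(M):
    """Swap rows and columns."""
    return [list(row) for row in zip(*M)]

def softmax(row):
    """Turn a row of scores into positive weights that sum to 1."""
    m = max(row)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(K[0])
    scores = matmul(Q, transpose(K))                     # matrix multiply
    scaled = [[s / math.sqrt(d) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]           # the nonlinear step
    return matmul(weights, V)                            # matrix multiply

# Hypothetical 3-token "sentence", each token embedded as a 2-d vector.
X = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]

# Hypothetical projection matrices; in a real model these are learned.
W_q = [[1.0, 0.0], [0.0, 1.0]]
W_k = [[1.0, 0.0], [0.0, 1.0]]
W_v = [[0.5, 0.0], [0.0, 2.0]]

Q, K, V = matmul(X, W_q), matmul(X, W_k), matmul(X, W_v)
out = attention(Q, K, V)
print(out)  # a 3 x 2 matrix: one new vector per token
```

Apart from the softmax, every step is a matrix multiplication; each output row is a weighted blend of the value vectors, with the weights decided by how strongly tokens "match" one another.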

That said, linear algebra can do very little without probability and statistics, because the way your algorithm is trained (that is, statistically optimized) is at the root of how models learn.

Probability and statistics

Probability and statistics are essential foundations of artificial intelligence. Any AI system that needs to make decisions based on data, and then update based on those decisions, relies heavily on both.

Probability provides a way to quantify uncertainty, while statistics allows agents to analyze data and draw conclusions from it - even data that may not traditionally seem 'analyzable', like words, images, or sounds.
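As a small illustration of applying statistics to words, the sketch below counts how often each word appears in a tiny, made-up corpus and turns those counts into estimated probabilities (count divided by total). Language models learn far richer, context-dependent statistics, but this shows the basic move of turning text into numbers you can reason about.

```python
from collections import Counter

# A tiny, made-up corpus; once counted, words become analyzable data.
corpus = "the cat sat on the mat the dog sat on the rug"
words = corpus.split()

counts = Counter(words)
total = len(words)

# Empirical estimate of each word's probability: count / total.
probabilities = {w: c / total for w, c in counts.items()}

print(probabilities["the"])  # 4 occurrences out of 12 words
print(probabilities["cat"])  # 1 occurrence out of 12 words
```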

In particular, two concepts are important to understand: optimization and Bayes' theorem. Optimization refers to iteratively improving an estimate using methods like gradient descent, which repeatedly nudges a model's parameters in the direction that most reduces its error.
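Gradient descent can be sketched in a few lines. This minimal, one-parameter example assumes a made-up squared-error loss whose minimum sits at w = 3; real models apply the same kind of update to millions or billions of weights at once, with gradients computed automatically.

```python
def loss(w):
    """Hypothetical loss: squared distance between w and a target of 3."""
    return (w - 3.0) ** 2

def gradient(w):
    """Derivative of the loss: d/dw (w - 3)^2 = 2 (w - 3)."""
    return 2.0 * (w - 3.0)

def gradient_descent(w, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient, i.e. downhill on the loss."""
    for _ in range(steps):
        w = w - learning_rate * gradient(w)
    return w

w_final = gradient_descent(w=0.0)
print(round(w_final, 4), round(loss(w_final), 8))
```

The learning rate controls the step size: too small and training crawls, too large and the updates overshoot the minimum.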

Bayes' theorem is a rule for computing conditional probabilities, such as the probability of a hypothesis given some evidence. This is important for AI because it allows agents to update their beliefs about the world as they receive new information.
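In symbols, Bayes' theorem reads P(H | E) = P(E | H) · P(H) / P(E). The sketch below applies it repeatedly to a hypothetical question: is a coin biased towards heads (assumed P(heads) = 0.8) or fair (P(heads) = 0.5)? The coin, its bias, and the sequence of flips are all made up; the point is how each new observation shifts the belief.

```python
def bayes_update(prior, p_evidence_if_true, p_evidence_if_false):
    """Return P(H | E) using Bayes' theorem.

    prior               -- P(H), the belief before seeing the evidence
    p_evidence_if_true  -- P(E | H)
    p_evidence_if_false -- P(E | not H)
    """
    # P(E) via the law of total probability.
    p_evidence = (p_evidence_if_true * prior
                  + p_evidence_if_false * (1.0 - prior))
    return p_evidence_if_true * prior / p_evidence

# H = "the coin is biased (P(heads) = 0.8)"; not H = "the coin is fair".
belief = 0.5  # start undecided
for flip in ["heads", "heads", "tails", "heads"]:
    if flip == "heads":
        belief = bayes_update(belief, 0.8, 0.5)
    else:
        belief = bayes_update(belief, 0.2, 0.5)
    print(flip, round(belief, 3))
```

Each heads nudges the belief towards "biased", and the single tails pulls it back; today's posterior becomes tomorrow's prior.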

Optimization and Bayes' theorem work together to give systems a way of moving in the right direction, so to speak. You get 'closer' to the desired result by moving in a direction, measuring your distance to the end result, and then moving again in a way that makes that distance smaller.

Basic AI concepts: where do I go from here?

At this point, you should have a reasonable understanding of some core AI concepts, including narrow AI, AGI, the Singularity, and the mathematics behind AI. If you're interested in learning more, I'd recommend the following resources:

- The Hundred-Page Machine Learning Book by Andriy Burkov: This concise book explains a wide range of artificial intelligence basics, and is invaluable if you want to understand the statistical side of AI, i.e., machine learning.

- AI for Everyone: Though non-technical in nature, this free online course from Andrew Ng provides a more comprehensive introduction to many of the basic AI concepts we've discussed here.

- This blog: Over the coming weeks and months, I'll be creating in-depth resources on how to get up and running with AI (going well beyond the basic concepts covered here). We'll learn how to create AI artwork, how AI text generation works, why AI deepfakes aren't that big of a deal, and more. I'll keep it accessible to a wide audience while also offering the opportunity to go deeper for those who are interested.