With artificial intelligence growing increasingly capable, humans will soon see a drastic change in their socio-economic status.

Models like PaLM and DALL-E 2 will disrupt programming, writing, advertising, art, and design globally - markets estimated at trillions of dollars each.

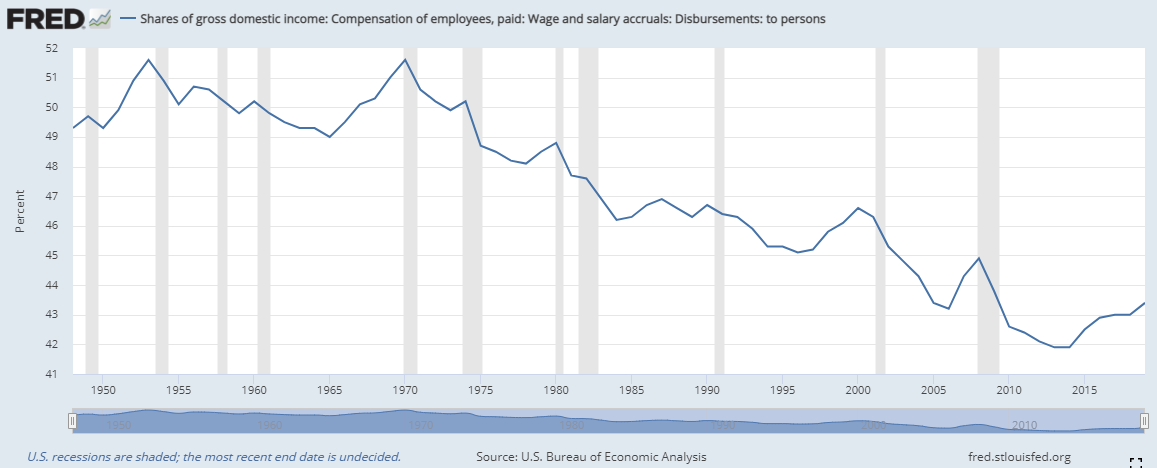

Over the next few years, this will undoubtedly lead to a stark redistribution of wealth from the middle to the upper class, alongside a rise in white-collar unemployment. Whoever owns these models will control large portions of the economy.

What can we glean from these insights? How can we stay ahead? In an effort to predict the socio-economic impact of artificial intelligence, I'll outline two plausible futures in this post:

- We let corporate artificial intelligence research continue unbridled.

- We enact strong government regulation now to try to control the upcoming disruption.

I'll also cover universal basic income, why projections like this can be naive, and a few economic trends that will help us better understand the future.

Future 1: Unbridled Free Market Growth

Without strict regulation, artificial intelligence will become the dominant choice for any knowledge-based industry, simply because of profitability. Once built, AI is orders of magnitude cheaper to run than human labor, better than human workers at a growing range of tasks, and capable of running 24/7 (whereas humans still need to eat, sleep, and kill time around the water cooler).

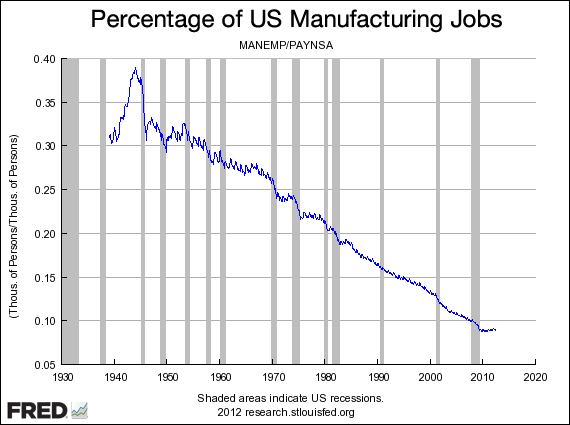

Over the next ten years, nearly every knowledge-based company on Earth - including software development firms, creative agencies, publishing houses, and media conglomerates - will shift to producing most of their output with the help of AI.

It won't be an all-or-nothing switch; rather, the ratio of AI work to human work will increase steadily over time, and market pressure will gradually push down the compensation of most lower-level employees until it is no longer enough to live on.

At the same time, a select subset of workers - primarily those in higher, executive positions - will be paid more, as keen decision-making skills that integrate diverse fields of knowledge will be essential to managing ever-larger fleets of AI resources. Nothing puts a premium on quality like quantity, after all.

Mass unemployment

By 2040, a large chunk of Western white-collar workers, who have long held themselves in high esteem because of their intelligence and technological acumen, will be out of work. They'll be forced to look for lower-paid manual labor that has yet to be automated, like logistically complex construction jobs.

The growing number of 'unskilled' workers will push wages down, significantly widening the wealth divide. This will fuel social unrest, pressuring world governments to regulate the use of artificial intelligence as unemployment rates soar. Democracies all over the world will look for 'quick fixes', and voters will elect strongman, populist leaders as a result.

Death & destruction

Bad decisions will follow. Unrest will manifest in different ways, many of which will not be immediately attributable to AI's effect on the economy: political infighting, spikes in crime, perhaps even civil war in some nations, brought on by extreme anti-rich sentiment.

At the same time, strong lobbying by artificial intelligence companies, like the Googles, Facebooks, and Amazons of tomorrow (or, just as likely, those exact same companies), will delay the inevitable. But mounting protests, riots, and crime will eventually force the government's hand.

Instead of regulating artificial intelligence, though, which would hamper both progress and the accumulation of capital, those in power will choose to enact a universal basic income policy so that the growing number of AI-displaced humans can live in relative comfort. Hopefully, this will quell the worst of the social upheaval.

But real wealth will continue to consolidate in the hands of the few.

Future 2: Strong Regulation In The 2020s

In the mid-2020s, forward-thinking politicians realize the immense transfer of power that will occur if artificial intelligence is left unchecked. They recognize AI's capacity to significantly improve quality of life and material outcomes for most humans, and legislate quickly in an attempt to distribute these gains as equitably as possible.

Their plan is multifaceted. One component is to tax AI-generated output at a significantly higher rate than human output, with the rate rising steadily over the next twenty years.

They use the resulting revenue to fund a universal basic income for humans displaced by increasingly automated work. After a set period of time (say, by 2045), the program expands to include everyone.

Alongside other regulations, like restrictions on compute, the plan goes into effect.

Because of the orders-of-magnitude higher margins AI allows for, companies - especially initially - still reap massive profits from the shift away from human workers.

Global productivity improves, albeit more slowly, and most people still benefit from better products, higher quality entertainment, and an improved quality of life.

At the same time, the increased spending and resulting inflation counteract the naturally deflationary effect of transformative technologies like AI, allowing the economy to transition more gently to one that is machine-driven.

There's still pressure, of course - riots, crime, and deaths occur as people's lives are thrown off balance by technologies they don't understand or know how to use. But the unrest is milder, because people's material needs have been consistently met for twenty years and they still feel like they have a choice.

Although there is a wealth divide (and that isn't necessarily a bad thing), putting nearly ten billion people on universal basic income blunts the degree to which AI's gains are held by a select few.

Caveats to strong regulation

I wrote the second future as a sort of utopian ideal. However, I should make it clear that 'legislating the problem away' rarely works as intended. It's naive, and although some regulation is probably preferable, how much is still an open question.

Government inefficiency & danger

First, governments are slow, inefficient, and often significantly outperformed by the private sector.

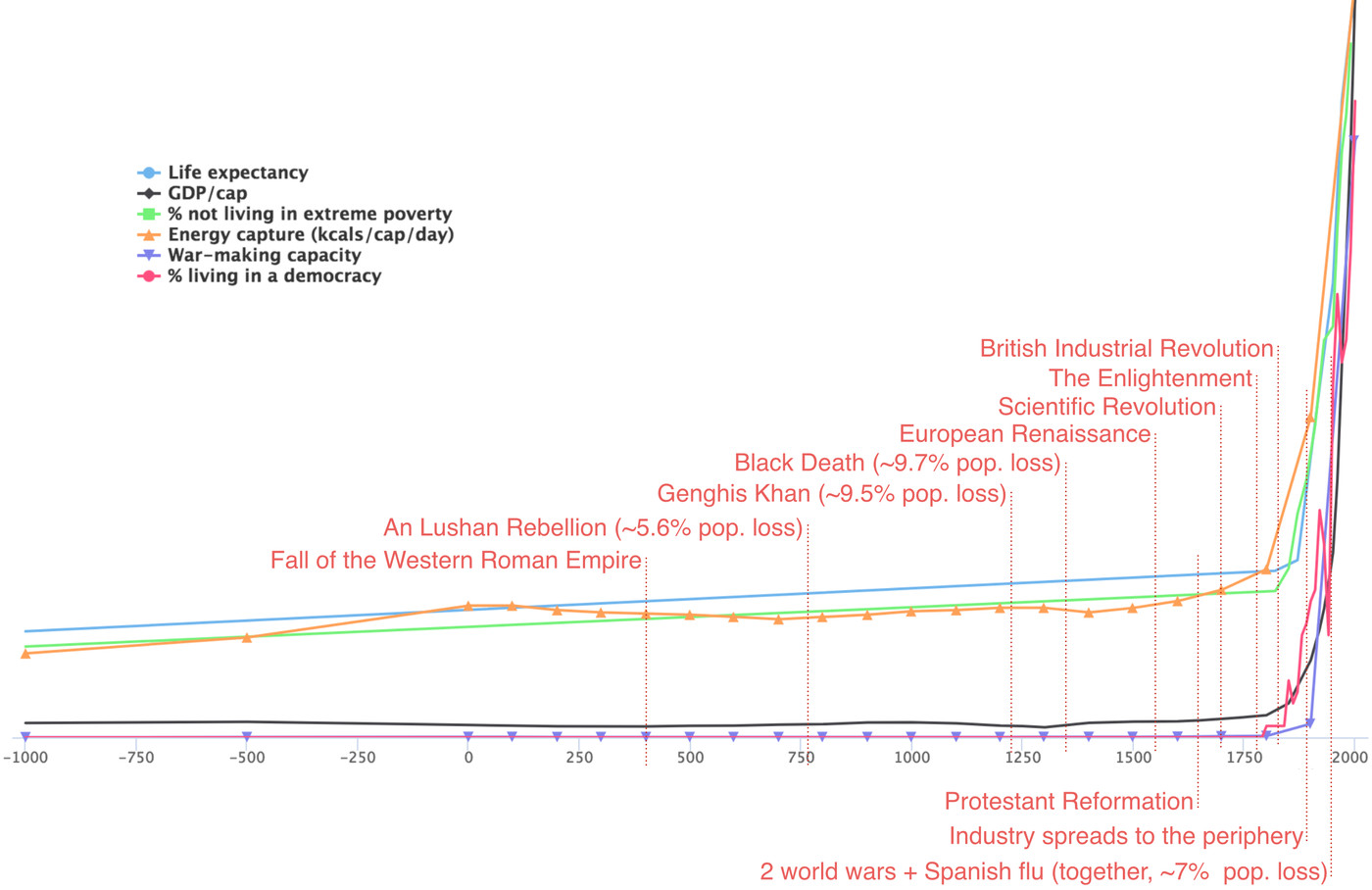

They're also the deadliest institution ever to exist. Governments don't have a good track record: in their various forms, they have destroyed more human lives than any other institution in history. Giving them the reins on an issue of global importance may be naive.

Politicians and regulators often act out of self-interest, too. The scenario above, in which a group of anonymous, benevolent world leaders come together to distribute AI's gains equitably (with little thought to how they might personally benefit), may not be all that realistic.

Growing corporate altruism

Likewise, though often painted as impersonal, money-hungry entities, AI companies are growing increasingly altruistic (at least on the surface).

Meta just open-sourced a suite of large language models of up to 30B parameters, and is also opening its 175B-parameter model, which performs similarly to GPT-3, to researchers on request. Approved researchers can download the roughly 350GB of model weights and study them under a noncommercial research license, greatly increasing the transparency of artificial intelligence research.

Meanwhile, OpenAI rigorously safety-tests its products before mass-market distribution, and its executive team has shifted many of its priorities away from growth and toward AI alignment. Though OpenAI has received some criticism for keeping aspects of its language models closed-source, the care it's taking with truly industry-destroying technologies like DALL-E 2 gives me hope that this caution is in pursuit of equitable distribution.

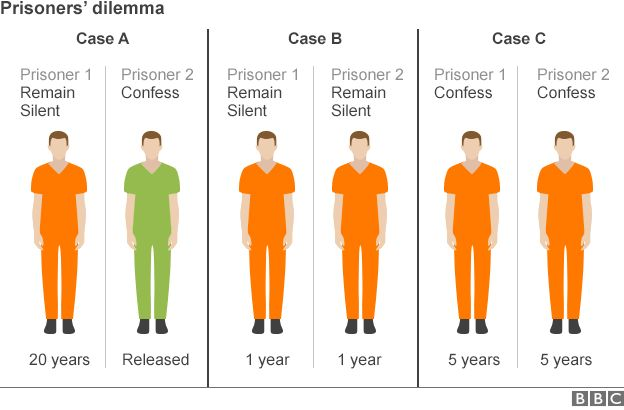

The bad actor dilemma

Let's say every world government comes together and agrees to hamper its own AI research over socio-economic concerns... except for one.

That outlier would receive a massive advantage economically. And you can bet they'd capitalize on it.

This is a version of the prisoner's dilemma: whatever the other nations choose, each nation individually comes out ahead by racing forward, even though all of them would be better off if everyone held back. Simply put, it's in a nation's best interests to push artificial intelligence research forward as quickly as possible, regardless of the impact on its own (or the rest of the world's) population. This is because of the first-mover advantage: a lead of just a few months in AI progress could compound into exponentially improving gains.

That nation's economy might slingshot years ahead, and the order-of-magnitude improvements in economic efficiency could create a situation akin to American world supremacy after the United States developed the atomic bomb.

Final thoughts: the socio-economic impact of artificial intelligence

That takes us back to the present.

The socio-economic impact of artificial intelligence may still sound like science fiction, but most of you will see it unfold in your lifetime. As such, you should understand it, prepare for it, and help others do the same.

My take? Though it would certainly be rife with implementation issues, any plan would be better than no plan, especially when it comes to technologies of this magnitude.

However, note that hampering AI growth would also prolong many forms of human suffering. How much faster could we solve world hunger, or push the frontiers of space habitation research, for example, if artificial intelligence development were taken to its logical conclusion?

Either way, I'm not sure what the right choice is. But it's being made as we speak.