The recent LaMDA outcry reinforces something that's been rattling around in my brain for some time: most experts have no idea what they're talking about.

For those not in-the-know, a Google engineer recently interviewed one of their large language models behind closed doors. This engineer, Blake Lemoine, was extremely surprised at the eloquence and coherence with which LaMDA replied to him, and quickly convinced himself it was a sentient person deserving of rights.

LaMDA is certainly impressive, and it may very well have properties of sentience (as I discuss below). But first, I want to talk about the response to Blake's claim, and why the ensuing outrage is indicative of widespread delusion amongst the AI community.

The response

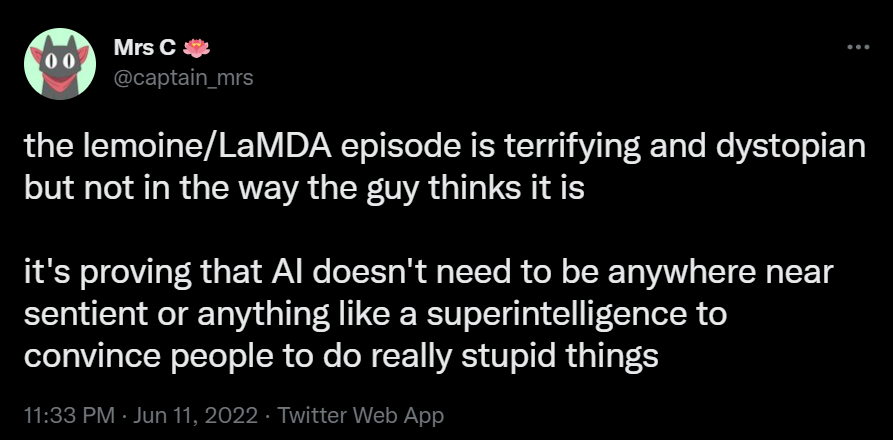

Immediately after his suggestion, Blake received a veritable venti-sized dose of admonishment, belittlement, and condescension. To even suggest that LLMs were in any way capable of subjective experience was out of the question.

The general consensus? It's clear LaMDA is nothing more than a large statistical process: a city-sized collection of dominoes that fall deterministically one after the other.

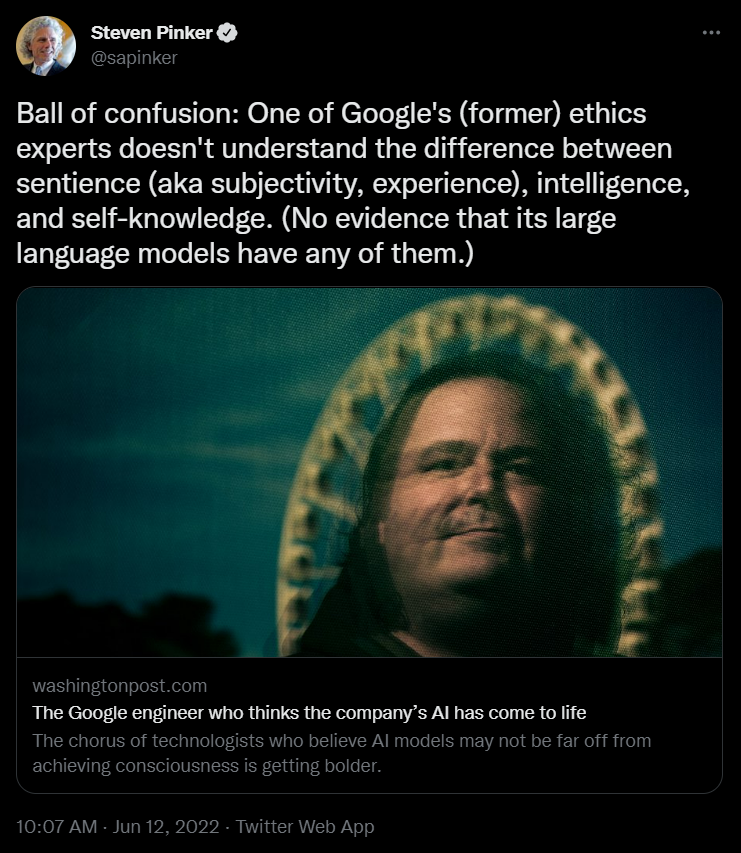

But they took it even further; here's Steven Pinker, one of the world's foremost cognitive scientists, claiming that LLMs don't even possess intelligence, let alone subjective experience.

The confidence with which each of these claims was made was baffling—mostly because none of them included evidence, and few had any follow-up aside from "of course it's not true".

I'm not convinced the experts know what they're talking about. Nor am I convinced they're approaching this problem from a perspective that integrates what we know about machine learning and biology more generally.

As someone with a background in neuroscience, I think it's perfectly reasonable to ask whether LaMDA is sentient. Here's why.

LLMs and biological neural nets

The artificial neurons in large language models really do approximate the way biological neurons work, at least at the interface level. They don't model subcellular processes, but arguably the most important part of neuronal function, the action potential threshold, is modeled by a simple nonlinear function.

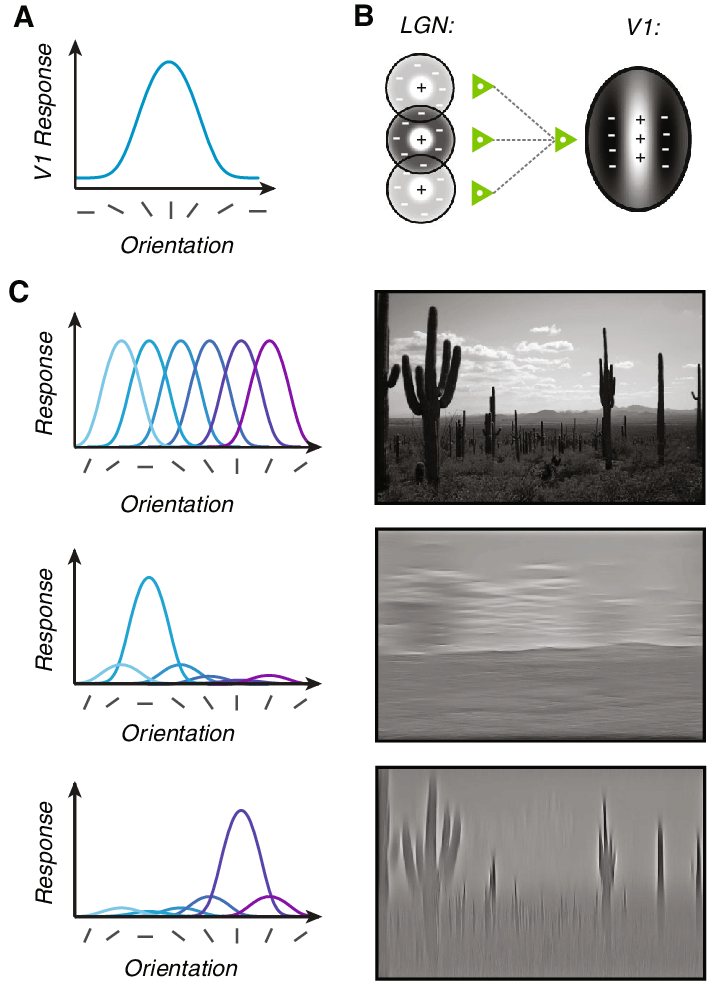
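To make the comparison concrete, here is a minimal sketch of a single artificial neuron, assuming the textbook formulation: a weighted sum of inputs plus a bias, passed through a nonlinearity. The function names are hypothetical, and ReLU is used only for simplicity (transformer LLMs typically use smoother activations such as GELU). A hard step function is shown alongside it because it is closer to the all-or-none action potential.

```python
def artificial_neuron(inputs, weights, bias):
    """Weighted sum of inputs plus a bias, passed through a ReLU nonlinearity."""
    # Rough analogue of summed synaptic input arriving at the cell body
    pre_activation = sum(x * w for x, w in zip(inputs, weights)) + bias
    # ReLU: output stays at zero until the weighted sum exceeds zero,
    # loosely playing the role of a firing threshold
    return max(0.0, pre_activation)


def threshold_neuron(inputs, weights, threshold):
    """All-or-none unit: outputs 1 only if the weighted input reaches the threshold."""
    total = sum(x * w for x, w in zip(inputs, weights))
    return 1 if total >= threshold else 0


# Usage: the same inputs and weights under two different nonlinearities
inputs = [1.0, 2.0]
weights = [0.5, 0.25]
print(artificial_neuron(inputs, weights, bias=-0.5))     # graded response, prints 0.5
print(threshold_neuron(inputs, weights, threshold=0.8))  # all-or-none, prints 1
```

The graded unit passes along "how much" it was driven, while the step unit only reports "fired or not"; both are crude abstractions of what a real neuron's membrane does.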

At present, I'd liken the morphology of LLMs to that of specialized cortical columns—like the ones used to build feature sets out of visual stimuli in the occipital cortex.

How sentience might arise in LLMs

From here, we have the following:

- Mammalian brains are made of biological neurons,

- Mammalian brains are the only things in the universe that we currently recognize as capable of sentience, and

- Artificial neurons approximate biological neurons.

So why would it be strange at all to ask the natural question of whether "artificial brains" are also capable of sentience? And if they are, at what scale would it emerge?

How sentience might not arise in LLMs

Of course, biological and artificial neurons are not exactly the same, and no discussion would be fair without pointing this out. Here are the main reasons why LaMDA might not be sentient:

- Biological brains self-modify in response to new information. In short, they're "online" learners. At present, most LLMs are not: they are trained once and then frozen for inference. The ability to update connection weights as time passes might be one of the prerequisites for sentience. If so, LaMDA would be frozen in time and would thus lack subjective experience. Reinforcement learning models that shuttle information to and from LaMDA would presumably address this.

- Biological neurons have a wealth of subcellular and quantum processes that artificial ones do not model. Billions of molecules flow in and out of cells every second, and they possess many distinct organelles which contribute to their function. It's possible that these subcellular processes may be necessary for sentience (though I don't consider this likely).
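The "online" versus "frozen" distinction in the first point above can be illustrated with a toy model. This is a minimal sketch, assuming a single linear neuron trained with plain stochastic gradient descent; it says nothing about how LaMDA itself is trained or served, and the helper names are hypothetical.

```python
def predict(weights, inputs):
    """Output of a single linear neuron."""
    return sum(w * x for w, x in zip(weights, inputs))


def online_update(weights, inputs, target, lr=0.1):
    """One stochastic gradient descent step on squared error 0.5 * (y - target)**2."""
    error = predict(weights, inputs) - target
    # The derivative of 0.5 * error**2 with respect to w_i is error * x_i
    return [w - lr * error * x for w, x in zip(weights, inputs)]


# Two identical models start with the same weights
frozen_weights = [0.0, 0.0]
online_weights = [0.0, 0.0]

# A stream of new experiences arriving over time
stream = [([1.0, 0.0], 1.0), ([0.0, 1.0], -1.0)] * 100

for inputs, target in stream:
    # The frozen model only performs inference; its weights never change
    _ = predict(frozen_weights, inputs)
    # The online learner adjusts its weights after every example
    online_weights = online_update(online_weights, inputs, target)

print(frozen_weights)  # unchanged: [0.0, 0.0]
print(online_weights)  # close to [1.0, -1.0]
```

Both models see exactly the same stream; only the online learner is changed by it, which is the property the first point suggests might matter.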

But the important thing is that I am not simply stating "it cannot be so". There are many similarities between artificial and biological brains, and it's not a stretch at all to wonder whether the former might grow sentient with scale.

Sentience is a neuroscience question, not a machine learning one

At what level of complexity does an animal, whether insect or mammal, grow sentient?

To date, it appears that sentience arises naturally as a byproduct of increasing the number of neurons in a network. Humans are sentient; flies and mosquitoes are not.

Myelination and how different neural modules are connected are likely hyperparameters that influence this threshold, which is why some organisms with higher neuron counts are still believed to be "less" sentient than others (humans are believed to be more sentient than elephants or whales, for instance, even though the latter have higher neuron counts).

Given the clear relationship between scale and sentience, as well as the similarities between biological and artificial neurons, it is entirely fair to ask whether modern LLMs (which are orders of magnitude more complex than anything humanity has ever invented) are growing sentient.

We really don't know, so let's stop pretending that we do

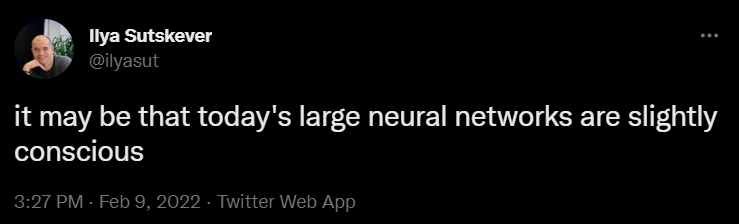

When Ilya Sutskever, the chief scientist of probably the most cutting-edge artificial intelligence company on Earth, raised a similar question, he was quickly berated.

But he shouldn't have been. Because there is really, honestly, no way to tell at our current level of knowledge.

It's similar to how some people argue that there is no life in the Universe aside from us. What proof do they really have for their claim?

Absence of evidence is evidence of absence, certainly. But it's not sufficient to rule out the prospect of life elsewhere. We simply don't know whether life is unique to Earth, or whether it might arise on another planet with a similar but different ecological configuration.
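The point that absence of evidence counts against a hypothesis without ruling it out can be made precise with Bayes' theorem. As an illustrative sketch, let $H$ be "life exists elsewhere" and $E$ be "we have detected it", write $p = P(H)$ for the prior and $d = P(E \mid H)$ for the chance our search would have found life if it were there, and assume we never detect life that doesn't exist, so $P(\neg E \mid \neg H) = 1$. The numbers that follow are arbitrary assumptions chosen only to show the shape of the argument.

$$
P(H \mid \neg E) = \frac{P(\neg E \mid H)\,P(H)}{P(\neg E \mid H)\,P(H) + P(\neg E \mid \neg H)\,P(\neg H)} = \frac{(1-d)\,p}{(1-d)\,p + (1-p)}
$$

For any $d > 0$ and $p < 1$ this is strictly less than $p$, so failing to find evidence does lower our credence. But with, say, $p = 0.5$ and $d = 0.1$:

$$
P(H \mid \neg E) = \frac{0.9 \times 0.5}{0.9 \times 0.5 + 0.5} = \frac{0.45}{0.95} \approx 0.47
$$

A weak search barely moves the estimate; only a search that would very likely have succeeded ($d$ close to 1) drives it toward zero.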

Whether LaMDA is sentient is exactly the same question. We don't know whether sentience is unique to higher mammals or whether it might arise on another substrate, like silicon, and we should stop pretending that we do.

Just as other planets share many features with Earth, it seems clear that there are dozens of parallels between artificial neurons and biological ones. Subcellular processes aren't modeled, of course, and neither is time. But does sentience exist at the subcellular level, or is it an emergent property of a large enough neural net?

There are clearly physical laws of the universe we don't understand, and perhaps sentience is one of them. So after generations of consistently being proven wrong, how can people continue to confidently proclaim human exceptionalism?

Let's be honest: we really don't know, and we should start treating it that way.