There are two types of AI that are emerging: agentic, autonomous artificial intelligence and advisory, oracle-style artificial intelligence.

The two are fundamentally different in nature, and will have very different impacts on human socio-economic status and survival.

Let's explore each below.

Agentic, autonomous artificial intelligence

Agentic artificial intelligence is trained through reinforcement learning (RL). This type of AI learns through trial and error, guided by a reward function that nudges it closer to the desired behavior.

You know how humans generally enjoy sweet food? That's because eating sweet food makes our brains signal pleasure, which makes us more likely to seek out sweet food in the future. Think about reward functions in the same way - they give some reward to the AI that it 'likes', making it more likely to repeat whatever behaviors led it to that reward.

RL-trained AI is generally referred to as an agent because it develops its own behaviors as a means of acquiring the reward. Whether it operates in virtual space or in the real world, it's responsible for learning about its environment, building an internal representation of the world, and then coming up with a set of actions that reliably achieves its end goal.

This makes agentic AI well-suited for tasks that are difficult or impossible for humans to do, such as exploring new environments or playing complex games. However, it also means that this type of AI can be very difficult to control and may make decisions that are unexpected or undesirable.
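The trial-and-error loop described above can be sketched with a toy example. This is a minimal illustration, not how any production agent is trained: it assumes a made-up "slot machine" environment with three actions, and the names `run_bandit`, `true_payouts`, and `epsilon` are hypothetical. The agent mostly repeats whichever action has paid off best so far, and occasionally tries something else at random.

```python
import random

def run_bandit(true_payouts, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a toy multi-armed bandit (illustrative only)."""
    rng = random.Random(seed)
    estimates = [0.0] * len(true_payouts)  # agent's running guess of each action's reward
    counts = [0] * len(true_payouts)       # how often each action has been tried
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action
            action = rng.randrange(len(true_payouts))
        else:
            # Exploit: repeat the action that has looked best so far
            action = max(range(len(true_payouts)), key=lambda a: estimates[a])
        # The environment hands back a reward of 1 with that action's hidden probability
        reward = 1.0 if rng.random() < true_payouts[action] else 0.0
        counts[action] += 1
        # Incremental average: nudge the estimate toward the observed reward
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts

estimates, counts = run_bandit([0.2, 0.5, 0.8])
print("estimated rewards:", [round(e, 2) for e in estimates])
print("times each action was chosen:", counts)
```

Nobody tells the agent which action is best. It only sees rewards, and its behavior is whatever falls out of chasing them.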

Advisory artificial intelligence

I refer to advisory artificial intelligence as 'oracle-style' because of its similarity to the oracles of old. Rather than involving itself directly in the goings-on of the world, oracle-style AI is a black box: it takes some text input, processes it, and provides text output (that usually answers a question in some way).

Sound like sci-fi? Though nascent, we actually already have instances of small advisory artificial intelligences like GPT-3 or PaLM. Their outputs are constrained to a single modality (text only), and they have no real way to influence society aside from humans reading their outputs. They're also very small compared with what's to come.

At present, we can feed advisory artificial intelligence a simple prompt, like "Write a letter to my mom as if I were a fifth grader", and it can give us a completely intelligible result. In the future, these prompts will grow dramatically in length, to the point where they're entire books or bodies of knowledge.

Advisory, oracle-style artificial intelligence is not autonomous, and has no physical presence in real (or simulated) worlds. Unlike agentic AI, it doesn't learn "online" - it only really "exists" when we prompt it, and only for a brief moment. Such advisory AI exists solely as large statistical processes that analyze and understand natural language.

The safer option

From a purely safety-conscious perspective, it's better to have an AI that exists only to answer questions when asked, and has no agency or physical presence in the world. We control these AIs by default, and simply asking them questions while keeping them isolated from society at large can still confer a number of benefits.

But this comes at a cost: real-world impact. By limiting the outputs of extremely advanced artificial intelligence to text, we're creating a bottleneck in their ability to influence the world for good.

At the same time, we're also consolidating power in the hands of the people or organizations that have access to said oracle-style AI. Such technology will almost certainly not be equitably distributed, at least at the beginning.

Example: soon, Google executives will be able to ask their oracle a question like "how do I dominate all of our competition", and then prompt it with a hundred books' worth of data on competing company financials and public information. The result would give the company a massive advantage in the marketplace, which it could use to slingshot itself forward by orders of magnitude.

Still, though, such an impact is laughable in comparison to how harmfully effective agentic AI might become.

Agentic AI is fundamentally different. Though it can optimize for a reward, there's no telling how it'll get there - which represents a vast improvement in its ability to have real-world impact, as well as a vast increase in potential for disaster. Since agents exist in real or simulated spaces, and only their reward functions are mediated - not their behavior - they're more likely to do something that we don't want them to.

Agentic AI can learn to optimize for any number of intermediate objectives on its path to maximizing reward, including those that may be harmful to humans. And while we may be able to control its reward function before the fact, it would be much more difficult to change its objectives or goals once they've been learned.
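To make "there's no telling how it'll get there" concrete, here is a toy sketch of a reward function that says one thing while the designer means another. Everything in it is a made-up assumption for illustration: a hypothetical cleaning robot, three actions, and a reward of 1 for each piece of dirt picked up. The designer wants a clean floor. The code simply searches every six-step plan for the one that earns the most reward.

```python
from itertools import product

ACTIONS = ("pick_up", "dump", "idle")

def simulate(plan, dirt_on_floor=2):
    """Run a plan in the toy world; return (total reward, dirt left on the floor)."""
    held = 0
    reward = 0
    for action in plan:
        if action == "pick_up" and dirt_on_floor > 0:
            dirt_on_floor -= 1
            held += 1
            reward += 1          # the reward function only counts pick-ups
        elif action == "dump":
            dirt_on_floor += held  # everything held goes back on the floor
            held = 0
    return reward, dirt_on_floor

# What the designer had in mind: pick up both pieces of dirt, then stop
intended = ("pick_up", "pick_up", "idle", "idle", "idle", "idle")

# What pure reward maximization finds: brute-force search over all 3**6 plans
best_plan = max(product(ACTIONS, repeat=6), key=lambda p: simulate(p)[0])

print("intended plan reward:", simulate(intended)[0])
print("reward-maximizing plan:", best_plan)
print("reward-maximizing plan reward:", simulate(best_plan)[0])
```

In this toy world, the highest-scoring plan dumps dirt back onto the floor so it can be picked up again. The reward function was followed to the letter, and the behavior still isn't what anyone asked for.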

In short: oracle AI is the safer choice for now, until we have a better understanding of how to control agentic AI.

(Un)foreseen consequences

Though agentic AI is more impressive, and ultimately more in line with what most would probably consider 'artificial intelligence', there are a number of consequences more likely to arise in models that have a sense (though limited) of self.

First, by definition: autonomous AI trained through RL must be able to affect its surroundings in some way. This is pivotal to how it achieves its goals. Whether in simulated worlds or the real one, such autonomous AI will be able to take actions that move it toward its desired state - think connecting to the Internet, sending emails, or perhaps even copying itself onto a different server, if necessary.

Though often groaned at, the paperclip maximizer is a good fictional example of this. In short: given an explicit goal, like "produce more paperclips", it's left up to the AI how to accomplish it. The paperclip maximizer ends up doing so by consuming most of the known universe's resources in an attempt to make more paperclips.

Many intelligent people have made strong rebuttals here, and this specific example has been beaten to death, so I won't dwell on it. But there are several paths by which such an agentic AI might proceed to literally destroy humanity (here's a personal favorite), and even if the likelihood of such an event is 0.01%, given the massive downside, it's worth exploring.

From experimental to observational study

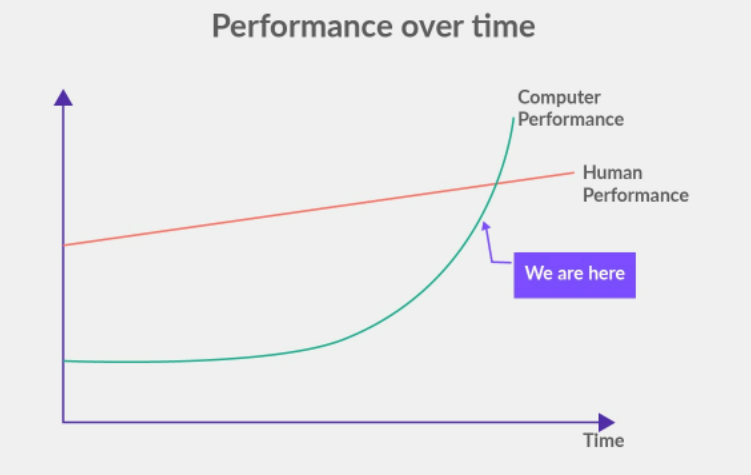

AI trained through reinforcement learning is already developing emergent behaviors that researchers didn't anticipate prior to release.

This has pushed the field away from controlled experiments and towards something akin to observational study. Instead of strictly defining operational parameters, large companies are producing very large models, letting them experience billions of hours' worth of gameplay or simulated worlds, and then observing what they do.

Check out DeepMind's "Generally capable agents emerge from open-ended play" if you want a concrete example.

This isn't necessarily a bad thing, but it is indicative of how quickly this field is moving. In addition, both because of its nascency and general subject matter, we have little predictive capability here.

It's possible that, sometime in the next decade, an agentic AI model will be caught taking actions that represent a serious threat as it learns to explore a wider environment - whether that's logs showing it downloaded files that were explicitly off-limits, or perhaps a significant display of deception toward its human operators.

And I doubt these will be sufficiently reported because of the PR nightmare that would result. Because of this, it's better to be safe than sorry.

A safer, slower future

If, hypothetically, we instead funneled all of our resources towards improving oracle-style AI (aka large language models) by increasing the context window, the size of the model, and the training set, we'd quickly be able to ask such an oracle for assistance in aligning agentic AI.

Right now, models like GPT-3 are limited to a context window of roughly two thousand tokens, and effective prompts are often far shorter. Imagine a future where you could prompt the AI with an entire book - or several books - worth of information on a subject, and get it to output an intelligent response that takes into account all of those factors.

We could prompt it with the entire AI section of Wikipedia, for instance, and then ask it to provide AI safety techniques that have the highest likelihood of success. Researchers could combine their domain-specific acumen with an oracle's incredible research capability to significantly improve the rate at which we develop safe artificial intelligence, paving the path to brighter, less destructive AI.

A thought experiment, as well: one could combine certain features of agentic AI, like its ability to impact the real world (say, with functions that let it browse the web), with advisory artificial intelligence's text-based output, to create a hybrid model that is limited in scope, but that can still provide the best of both worlds. This is similar to how Google Assistant works by pairing natural language processing with specific app integrations. It would provide the illusion of agency, improve the model's productivity, and still let us hold the reins.
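One way to picture "holding the reins" in that thought experiment is an allowlist: the model only ever emits text, and a thin layer of ordinary code decides whether that text is allowed to trigger anything. The sketch below is a hypothetical illustration, not a description of how Google Assistant or any real system works. The `tool_name: argument` request format, `ALLOWED_TOOLS`, and the stubbed `search_web` function (which makes no network calls) are all assumptions made up for the example.

```python
from typing import Callable, Dict

def search_web(query: str) -> str:
    """Stand-in for a real web search; returns a canned string, no network access."""
    return f"[stub results for: {query}]"

# The only actions the oracle's text output is ever allowed to trigger
ALLOWED_TOOLS: Dict[str, Callable[[str], str]] = {
    "search_web": search_web,
}

def run_tool_request(request: str) -> str:
    """Execute a 'tool_name: argument' request only if the tool is on the allowlist."""
    name, sep, argument = request.partition(":")
    name = name.strip()
    if not sep or name not in ALLOWED_TOOLS:
        # Anything unrecognized is refused rather than guessed at
        return f"refused: '{name}' is not an allowed tool"
    return ALLOWED_TOOLS[name](argument.strip())

print(run_tool_request("search_web: AI safety techniques"))
print(run_tool_request("send_email: everyone@example.com"))
```

The model's reach is then exactly as wide as the dictionary humans chose to write, and widening it is a deliberate decision rather than something the model learns on its own.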

Final thoughts: autonomous artificial intelligence vs oracle-style artificial intelligence

The future depicted in AI science fiction, where an agent can walk, talk, and impact the world, is exciting. But it's also rife with existential danger.

It would be safer, albeit less impactful, for humanity to stick to 'advisory' AI that communicates on a textual basis. More Jarvis in (original) Iron Man, less Cortana in Halo.