From the early days of antiquity, humanity has grappled with the notion of machine thought.

In early Greek myths that predate 500 BCE, Talos, a giant automaton made of bronze, would patrol the shores of Crete each day to protect Europa. He had human qualities like emotions and judgements - and this was conceived of over two thousand years ago.

More recently, in 1843, Ada Lovelace speculated that machines:

"Might compose elaborate and scientific pieces of music of any degree of complexity or extent."

That people this far in the past could conceive of the possibilities of machines shows both the depth of their imagination and humanity's inherent fascination with intelligence.

Since then, much of the last two thousand years of technology development has been in pursuit of mechanizing - if not outright solving - the process of thinking.

To answer the question of whether machines can think, I'm first going to take you through a laundry list of current AI capabilities. Some will amaze you, others will frighten you, and most will come as a grave shock to anyone who hasn't kept feverishly up to date on the latest computer advances.

Then, we'll look at how human capabilities stack up - and what this means for a world where machines are rapidly outpacing us on almost every front.

Can machines write?

I wrote close to 50% of this post with a machine. I fed the title, some opening sentences and a few other bits of structure into a text model, and it autonomously generated several paragraphs for me.

I then edited the output and repeated the process until the article was complete.

If you haven't been keeping up, this will probably make some of you scratch your heads. Machines writing? Isn't that a thing only people can do? How can machines - that deal strictly in ones and zeros, inputs and outputs - do something that seems so distinctly... human?

That was the same logic applied to chess before the 1990s. Chess was long considered a distinctly human realm of problem solving (one that required 'pure intelligence'), so the rise of chess computers like Deep Blue was a significant blow to the human intellectual ego.

And from a strictly statistical perspective, writing isn't that different.

- Sentences are like chess sequences: series of moves (words) occur one after another.

- Like chess, there are also rules that define the game (syntax & grammar). Good players make better use of the rules; poor players consistently find themselves flummoxed by them.

Given a sufficiently large set of words (and a ton of computing infrastructure), a machine learning model like GPT-3 can learn the most likely next word for any given sequence and then generate it.

And if it repeats this - if it feeds the results of one cycle back into another cycle - it can write sentences, paragraphs, and even entire articles.
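Here's a minimal sketch of that predict-and-feed-back loop. It assumes a toy word-pair counter standing in for a model like GPT-3, which uses a large neural network rather than a lookup table. The function names and the tiny corpus are made up for illustration.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows

def most_likely_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in follows:
        return None
    return follows[word].most_common(1)[0][0]

def generate(follows, start, max_words=10):
    """Feed each predicted word back in as the input for the next prediction."""
    output = [start]
    for _ in range(max_words - 1):
        nxt = most_likely_next(follows, output[-1])
        if nxt is None:  # nothing ever followed this word in the corpus
            break
        output.append(nxt)
    return " ".join(output)

# Hypothetical toy corpus; a real model trains on billions of words.
corpus = "the cat sat on the mat and the cat slept"
model = train_bigram(corpus)
print(generate(model, "sat", max_words=4))  # prints "sat on the cat"
```

Always picking the single most likely word makes the output repetitive. Real text models typically sample from the predicted probabilities instead, which is part of why their writing feels less mechanical.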

Can machines make art?

If you've read my last few posts, you'll know that machines have already conquered art. There are many approaches that have enabled engineers to do this - the most successful, though, has been diffusion.

Diffusion is a statistical process whereby noise is increasingly added to an image, and a machine learns to reverse that process. I use it to generate all of the artwork at 1SecondPainting.

With massive computing power (and with some clever math), this has given modern AI the ability to create almost any image starting from pure random noise.
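To make the "adding noise" half concrete, here's a rough sketch of the forward process on a toy four-pixel image. It assumes a fixed noise amount per step (`beta`), which is a simplification; real systems vary it on a schedule. The learned reverse step, where a neural network predicts and removes the noise, is left out entirely. All names here are illustrative.

```python
import math
import random

def add_noise_step(pixels, beta, rng):
    """One forward step: shrink the signal slightly and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]

def forward_diffusion(pixels, steps, beta, seed=0):
    """Return every intermediate image, from the clean original to near-pure noise."""
    rng = random.Random(seed)
    history = [list(pixels)]
    for _ in range(steps):
        history.append(add_noise_step(history[-1], beta, rng))
    return history

def signal_fraction(steps, beta):
    """Fraction of the original pixel value still present after `steps` steps."""
    return math.sqrt(1.0 - beta) ** steps

image = [1.0, 0.5, -0.5, -1.0]  # a toy four-pixel "image" (assumed values)
trajectory = forward_diffusion(image, steps=200, beta=0.05)

print(len(trajectory))             # the original plus 200 noisy versions
print(signal_fraction(200, 0.05))  # under 1% of the original signal remains
```

Generation runs this in the opposite direction: start from random noise and repeatedly apply the trained denoiser until an image emerges.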

By combining this with modern natural language processing, we can steer the AI towards creating a favorable end result based on a caption. And the best part? The entire process takes just a few seconds, which many people don't even believe is real.

Can machines create programs?

In a cruel twist of fate, programming - once considered the pinnacle of non-automatable industries because of the depth of thought necessary to write good programs - is actually one of the first domains in which automation is displacing humans.

Programming is writing, and if you train the aforementioned writing AI on code instead of English, it can learn to associate requests with functional programs that fulfill their intended purpose. Many organizations use AI programming tools like Codex or Copilot to write a significant percentage of their code, and whatever that percentage is, it means an equivalent amount of output not required from human programmers.

Of course, the complete automation of programming has yet to be 'solved', but over the coming years, more and more code will be written by machines - to the point where humans will write fewer programs than... well... programs.

Can machines have conversations?

It turns out conversations, too, can be handled by machines. In 2018, well ahead of its competitors, Google unveiled Duplex, a realistic, nearly human-indistinguishable conversation partner built to be a personal assistant. What the world saw at the Google I/O keynote that day sent waves rippling throughout society.

Immediately after revealing this technology, Google received a fair amount of backlash. Nobody thought voice synthesis had come this far in so little time, and this was, to put it mildly, creepy as hell.

But Google Duplex has been working steadily in the United States for the last three years. If you've handled phone calls for a restaurant, there's a reasonable chance you've actually spoken with a robot and not known it. And behind the scenes, this approach is likely already being used in sales, robocalls, customer service, and more.

Can machines solve complex problems?

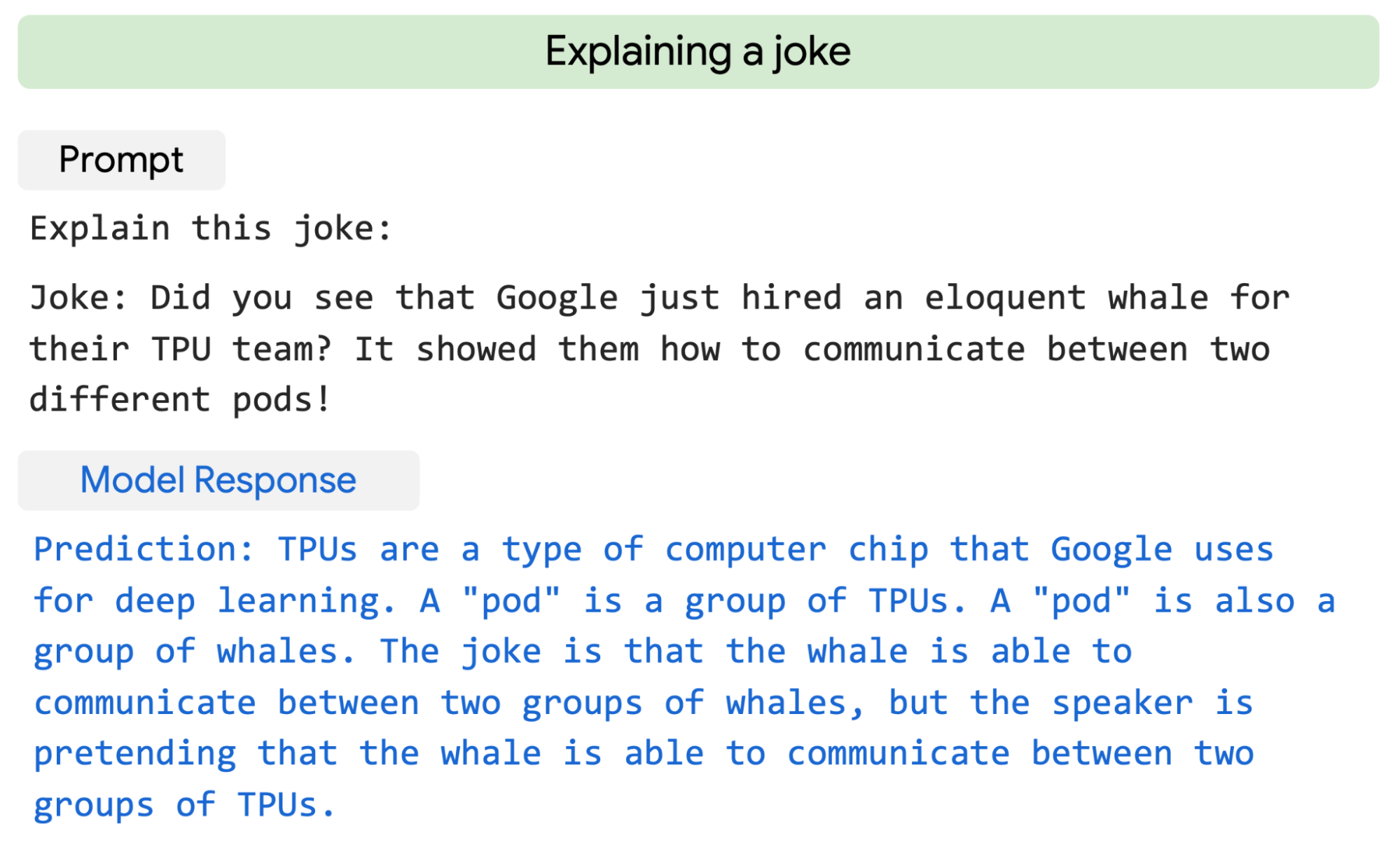

In early April 2022, Google announced its Pathways Language Model (PaLM). Riding on the heels of incredible advances in scale, PaLM is actually better than the average human being at solving most of the tasks it was tested on. Plus, it does it hundreds of times faster.

It can explain semantically complex jokes, translate code, write stories, and more. Though often compared to GPT-3, PaLM is in a league of its own.

In the future, machines will undoubtedly write, solve, and grade the problems used to teach humans in schools and universities around the globe. The script will be flipped; we'll be learning from machines rather than the other way around.

Can machines move?

Building machines that understand the real world is significantly more difficult than building ones that spend their time in a software-only environment. But despite this, machine movement has been growing increasingly complex over the last decade, mostly thanks to DARPA-funded organizations like Boston Dynamics.

Spot, for example, walks, runs, navigates terrain, and understands environmental conditions. What's more, it can carry non-trivial loads (one example shows a 14 kg brick).

Can machines kill?

Though there are yet to be any recorded instances of real-world machines killing humans, I suspect it will happen in the next few years. Enterprising soldiers have already been mounting guns onto Spot-like robots, and the result is hair-raising.

Black Mirror: here is exactly what not to do

Humans: shut up it’s gun mounted on robot dog time 🥳🥳🥳 pic.twitter.com/taOquSTOvw

- Graeme Moore (@MooreGrams), April 13, 2022

How long it will take before they begin battle deployment en masse is anyone's guess.

Can machines think?

So, machines can talk, write, create art, move, solve problems, and presumably kill. But can they think?

If, like many current AI critics, you believe that machines can't think, then you must also naturally assume that:

- Talking doesn't require thinking

- Writing doesn't require thinking

- Solving problems doesn't require thinking

- Creating art doesn't require thinking, and

- Moving or killing doesn't require thinking

Human exceptionalism

As machines grow increasingly complex, the case for human exceptionalism (the idea that humans are innately different from all other beings) retreats further and further. In 2055, will some people answer 'can machines think' with 'yes' only if said thinking occurs in biological, carbon-based substrates?

At present, most people still believe thinking is a uniquely human activity. They'd say that what AI is doing isn't thought - it's just statistical pattern matching.

But consider that the inner workings of the human brain are no better understood than what's going on inside AI right now. In both humans and machines, the processes from which an end result is derived (like what the next word is in a sequence) come from a black box.

We understand the low-level principles behind human brains and large AI models: how neurons fire signals, or how machine learning optimizes a particular objective. But we can't peer under the hood and make sense of why. The emergence of complex, higher-level behavior has both neuroscientists and modern AI engineers stumped.

My point: there is no definitive line at which a hill turns into a mountain. Likewise, there's no critical boundary at which we go from simply optimizing an objective to thinking, writing poetry, creating vivid artwork, or even falling in love.

Can machines think? If you don't yet believe they can, it might be worth re-examining why.