When I began 1SecondPainting, I knew AI had crossed a line we'd never be able to go back from.

Machines have always grown more capable with time. Yet I (and most others) had always thought their abilities would be relegated to simple, monotonous tasks.

Operating an assembly line, or putting together a car, for instance: these were the sorts of things AI was "for", and I had always been comfortable with that.

But the events of 2020 changed everything.

Within just a few months—a blink in humanity's long relationship with technology—modern artificial intelligence graduated. In June of that year, GPT-3 was released to the wider public. And in December, VQGAN, one of the most impactful advances in generative machine art, landed with a big splash.

For the first time, AI was reproducing human creativity. Art, design, and language, concepts traditionally thought of as possessing the essence of mankind, were no longer off the table. And very few understood why.

However, this pattern of increasing machine capability was actually first predicted almost 40 years ago. And we as a society can use it to help inform where we're going next.

Moravec's paradox

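In the late 1980s, computer scientist and roboticist Hans Moravec proposed an interesting paradox: it is "comparatively easy to make computers exhibit adult level performance on intelligence tests or playing checkers, and difficult or impossible to give them the skills of a one-year-old when it comes to perception and mobility".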

Put another way, it's easy for computers to do the things humans find difficult (intelligence), and hard for computers to do the things we find easy (perception and mobility).

For instance, the ability to do higher-level mathematics is a hallmark of keen intelligence in humans. Most find it exceedingly hard, and it takes years of dedicated study to be able to solve even a fraction of the word problems in your average calculus textbook.

But machines can do any level of mathematics effortlessly. Whether it's linear algebra or differential geometry, it's never any more than simple arithmetic to them.
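To make the "simple arithmetic" point concrete, here is a minimal sketch of a calculus-textbook task, a definite integral, reduced to nothing but additions and multiplications. The function name `trapezoid` and the choice of integrand are illustrative assumptions, not anything from Moravec's work.

```python
def trapezoid(f, a, b, n=1000):
    """Approximate the integral of f over [a, b] using the trapezoid rule.

    The loop below performs only additions and multiplications: the
    "calculus" has been reduced to plain arithmetic.
    """
    h = (b - a) / n                 # width of each slice
    total = 0.5 * (f(a) + f(b))     # the two endpoints count half
    for i in range(1, n):
        total += f(a + i * h)       # interior sample points
    return total * h


# Illustrative integrand: x^2 on [0, 1], whose exact integral is 1/3.
approx = trapezoid(lambda x: x * x, 0.0, 1.0)
print(approx)
```

A student needs to know the power rule to get 1/3; the machine just adds up a thousand thin slices.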

From computing to culture

This is a trope you're probably already familiar with: computers have always been great at... well, computing. That's why we made them in the first place.

But now, it goes much further than just mathematics: Moravec's paradox is beginning to apply to all intelligent tasks, regardless of domain, including language, formal reasoning, and artistic creativity.

GPT-3 can already write better than the average university graduate; DALL-E 2 and Imagen are more adept than the average artist; and the litany of new language models like LaMDA and Flamingo is now solving complex reasoning problems that intelligent humans routinely struggle with.

In a few more years, it's likely that AI will be able to produce world-class art pieces, full-length books and whitepapers, and songs of near-infinite complexity. In short: machines will be in charge of furthering human culture, not other humans.

But why? How has AI managed to do something in just a few years that mankind has taken thousands to get right?

Humans are great at perception & mobility—but not much else

It's simpler when you look at it from the perspective of evolutionary history. Human brains spent far more time evolving for survival than for esoteric pursuits like logic, reasoning, or art.

Meanwhile, mobility tasks (walking, running, grabbing things, and balancing) are so easy for us that we don't even think about them. They occur subconsciously. Most of our brains have been optimized over millions of years to get them right, and one could say that humans were literally built for movement.

But writing the next Moby Dick, or reasoning through a difficult problem? These are relatively new in our historical timeline, so evolution hasn't had the time to build streamlined brain structures to take care of them. For that reason, they require an intense amount of thought, focus, and work.

So it's not necessarily that language, art, and meaning are difficult problems to master. It's more that they just seem that way to us, since humans have always approached them with a very limited set of tools. And when we give problems like this to modern AI, it doesn't sense the intrinsic difficulty like we do because, as Moravec believed, it's easy for computers to do the tasks we find hard.

Why robots struggle with sandwiches

At the same time, there are still some very simple things humans do that are exceedingly difficult for machines. Consider, for example, the loosely defined task of making a ham and cheese sandwich.

A human being's "algorithm" for doing so involves 1) finding bread, 2) slapping it on the counter, 3) opening up the ham and cheese, and 4) assembling the ingredients. Then, you put everything else back in the fridge and get to munching.

Computers, on the other hand, have a very difficult time with tasks like this, and it's chiefly because of perception and mobility.

Let's pretend it's the year 2050, and a general "helper" machine has just been created to assist people with household tasks. Here's a slice of what it would have to think through to fix a ham and cheese sandwich:

- Where is the refrigerator relative to my body?

- How much resistance is expected when I try to open the door?

- What distance can I lean over without compromising my center of mass?

- How heavy, approximately, is the container that I'm reaching for?

- What sort of force would I have to apply while grabbing it to ensure it doesn't slip?

- What sorts of objects are inside? How will my movement affect them? If I move too quickly or with too much force, will I crush them?

- How hard should I place this on the counter?

...and that's before even beginning to put it together!
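To give a feel for just one item on that list, here is a rough sketch of the grip-force question. It assumes a hypothetical two-finger gripper and a simple Coulomb friction model, where the friction from both fingers must at least support the container's weight. The function names, the safety factor, and every number are illustrative assumptions, not measurements from a real robot.

```python
G = 9.81  # gravitational acceleration in m/s^2


def min_grip_force(mass_kg, friction_coeff, safety_factor=1.5):
    """Smallest squeeze force (in newtons) per finger that keeps an
    object from slipping out of a two-finger gripper.

    Each finger contributes friction_coeff * force of upward friction,
    so we need 2 * friction_coeff * force >= mass_kg * G.
    """
    if mass_kg <= 0 or friction_coeff <= 0:
        raise ValueError("mass and friction coefficient must be positive")
    weight = mass_kg * G
    return safety_factor * weight / (2 * friction_coeff)


def is_safe_grip(force_n, mass_kg, friction_coeff, crush_limit_n):
    """True if the force is enough to hold the object but not crush it."""
    return min_grip_force(mass_kg, friction_coeff) <= force_n <= crush_limit_n


# Hypothetical container: 0.5 kg, slightly slippery plastic (mu = 0.5),
# and a lid that deforms if squeezed with more than 20 N.
needed = min_grip_force(0.5, 0.5)
print(needed, is_safe_grip(10.0, 0.5, 0.5, 20.0))
```

Even this toy version needs the mass, the surface friction, and the crush limit, none of which the robot knows until it has perceived the object. A person settles all three without noticing.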

It's taken a tremendous amount of engineering ingenuity just to get robots to walk, let alone do domain-general tasks like opening doors or making the aforementioned sandwich. Meanwhile, human children can accomplish them with little to no effort.

This is at the core of what Moravec meant when he said it's "difficult or impossible to give [machines] the skills of a one-year-old when it comes to perception and mobility". Our minds handle an incredible amount of perception- and mobility-related information subconsciously, so much so that, to us, they're the easiest tasks in the world.

Economic considerations

This should have given you some sense as to why intelligence models—those focused on language and reasoning—have advanced so quickly relative to their robotic counterparts.

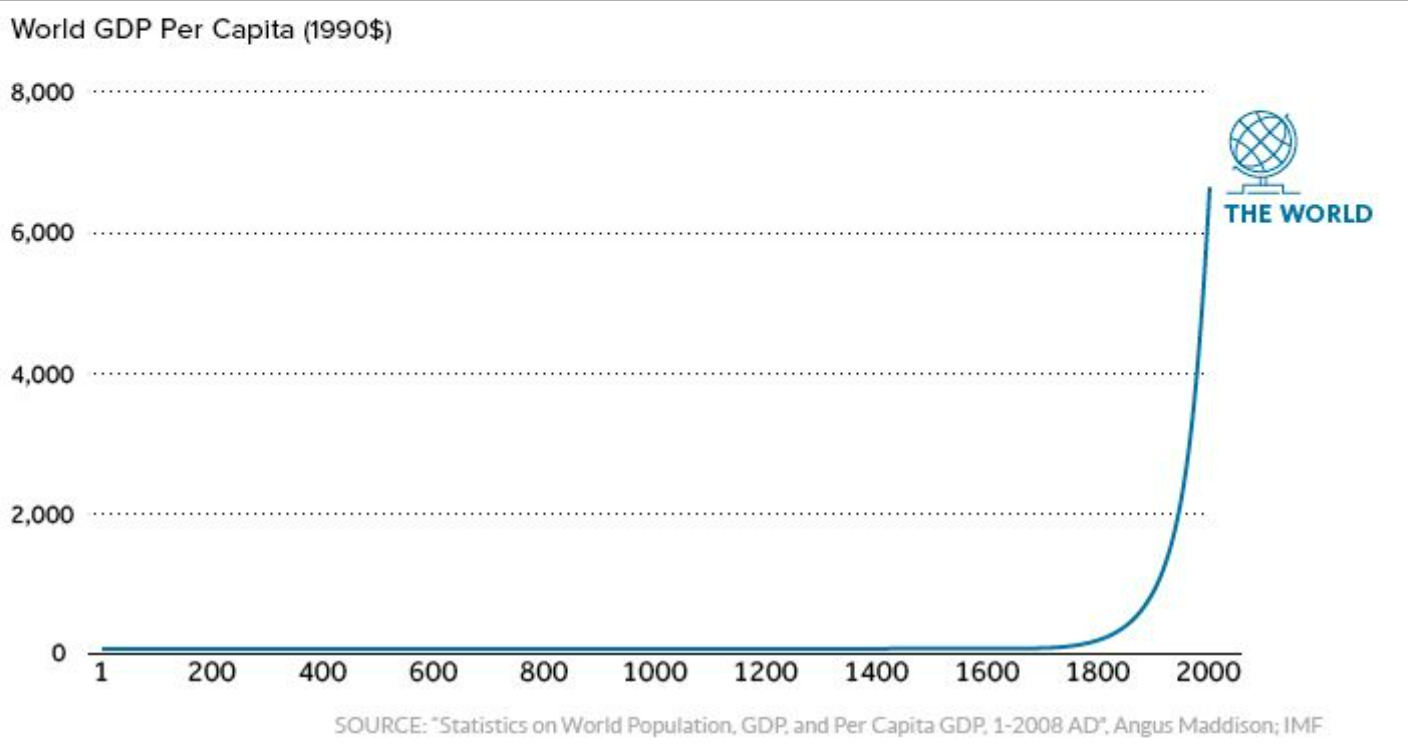

But it goes further than that. In addition to the question of scope, there are also large economic forces that are encouraging markets to solve intelligence quickly.

For one, solving intelligence would easily be the most economically impressive feat in history. A generally capable language model with sufficient bandwidth would instantly 10x the financial value of any nation-state: all entertainment, negotiation, R&D, and business would be automatable, and the quality of each task would be orders of magnitude higher than what humans are capable of.

I've detailed why in previous posts, so I won't get into that here. But suffice it to say, if you were to compare the expected economic utility of a superintelligent language model to that of a sandwich maker, for instance, it's clear which one venture capital firms are going to pick.

Additionally, it's much easier to iterate with software than it is with hardware. The former can instantly deploy across millions of devices with just the click of a button; the latter needs physical upgrades, maintenance, and logistical considerations. These increase the cost, reduce the palatability, and subsequently raise the difficulty.

Humans will turn to perception and mobility eventually. But not before intelligent tasks like language, art, and reasoning are mostly solved. And, by that point, it's more likely that AI will be spearheading the R&D.

Final thoughts

In short, Moravec's paradox is the reason why AI has been advancing so rapidly in language and art. It's also the reason why it has lagged significantly behind our expectations in robotics and locomotion.

Given enough time, it's clear AI will eventually surpass human intelligence in every relevant domain. But in the meantime, remember that the skills that come most naturally to us are often the hardest for machines to learn—and plan for a near-future where that's the case.